Weekly Highlights: AI is the Next Platform, One Step Closer to AGI, and AI's Killer Use-Case

The most important things that happened in AI in the last week.

Hi Hitchhikers!

A massive welcome to the 500+ new subscribers who hitched a ride with us this week. The Hitchhiker’s Guide to AI now has over 2,000 subscribers!

Here’s what I’ll cover in this week’s highlights:

- Why AI just became the next tech platform.

- How GPT-4 is showing signs of Artificial General Intelligence (AGI).

- Which killer use-case for AI will generate more economic value than any previous technology.

But before I jump into this week’s highlights, I have a favor to ask: if you enjoy reading this newsletter and want to support my writing, please share The Hitchhiker’s Guide to AI with a friend and help me get to 5,000 subscribers by June!

1. OpenAI casually ushers in the next major technology platform

OpenAI has been on a roll lately, with one announcement after another in the last month. First it was ChatGPT 3.5, then the ChatGPT API, quickly followed by GPT-4 just over 10 days ago. Then last week they casually dropped their most consequential software update yet: ChatGPT Plugins.

We’ve added initial support for ChatGPT plugins — a protocol for developers to build tools for ChatGPT, with safety as a core design principle. Deploying iteratively (starting with a small number of users & developers) to learn from contact with reality: openai.com/blog/chatgpt-p…

— Greg Brockman (@gdb) 5:02 PM ∙ Mar 23, 2023

In a tweet that was heard by AI startup founders across the land, OpenAI’s co-founder Greg Brockman announced that ChatGPT will now support “plugins” that enable its chatbot to interact with other software, browse the internet and write code to solve problems! In doing so, OpenAI immediately destroyed the roadmaps of dozens of startups working on actionable AI[1] platforms while simultaneously launching a Siri/Echo/Google Assistant competitor.

If you want a deeper understanding of the features included in ChatGPT plugins, read this Twitter thread I wrote that dives into the announcement in more detail:

The implications of this announcement are pretty profound, not just for startups considering building in this space 😬 but Google, Amazon and Apple too.

— AJ Asver 🤖👨🏾💻 (@_aj) 5:20 PM ∙ Mar 23, 2023

OpenAI has effectively just launched its Siri/Echo/Assistant competitor, except it's going to be way better than all of them… https://t.co/11EeNTwga8

What I want to talk about instead are the implications of this move by OpenAI. Prior to plugins, ChatGPT, which is powered by OpenAI’s large-scale language model[2] GPT-4, was limited in its ability to answer questions and solve problems. That’s because it only knows about the world up until September 2021, plus whatever input you provide in your messages.

With plugins, ChatGPT can now interact with the outside world. It can retrieve information and take actions beyond the bounds of its own training data and the conversation you are having with it. Going one step further, OpenAI has made it so easy to develop plugins for ChatGPT that it has created a new technology platform for AI. That platform, which is powered by GPT’s ability to reason, synthesize, collaborate and creatively solve problems, has the potential to be more powerful than Apple’s, Google’s, Meta’s or Amazon’s developer ecosystems. Why? Because unlike on iOS or Android, where you have to take action yourself to get things done, with ChatGPT you can ask an AI to take care of it for you.

In the announcement video, OpenAI demonstrates exactly what I’m talking about when it asks ChatGPT to help make a restaurant reservation, plan a meal, calculate its calorie count and then buy the ingredients, using three of the plugins available at launch: OpenTable, Instacart and WolframAlpha.
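To make the platform idea concrete, here is a toy Python sketch of what a plugin host does at its core: it keeps a registry of tools, each with a plain-language description the model can read, and it executes whichever calls the model decides to make. Everything in it is hypothetical. The function names, the `REGISTRY` and the plan format are illustrative stand-ins, not OpenAI’s plugin protocol (real plugins are web APIs described to ChatGPT by a manifest file and an OpenAPI specification), and the hand-written plan stands in for the decisions the model itself would make.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-ins for the three launch plugins in the demo. Real plugins
# are remote web services; these stubs only return strings so the flow is visible.
def book_restaurant(cuisine: str, city: str) -> str:
    return f"Booked a table at a {cuisine} restaurant in {city}"

def count_calories(recipe: str) -> str:
    return f"Requested a calorie count for {recipe}"

def order_ingredients(recipe: str) -> str:
    return f"Added the ingredients for {recipe} to the cart"

@dataclass
class Plugin:
    description: str             # plain-language text the model reads to pick a tool
    handler: Callable[..., str]  # code the host runs when the model picks this tool

# Hypothetical registry; names loosely mirror the demo's OpenTable, WolframAlpha and Instacart.
REGISTRY: dict[str, Plugin] = {
    "opentable": Plugin("Find and book restaurant tables", book_restaurant),
    "wolfram": Plugin("Compute facts such as calorie counts", count_calories),
    "instacart": Plugin("Order groceries for delivery", order_ingredients),
}

def tool_menu() -> str:
    """The tool descriptions a host would show the model alongside the user's request."""
    return "\n".join(f"{name}: {p.description}" for name, p in REGISTRY.items())

def run_plan(plan: list[dict]) -> list[str]:
    """Run each step of a plan shaped like {"plugin": name, "args": {...}}.

    Here the plan is written by hand; in a real system the model would produce
    these steps after reading tool_menu() and the user's request.
    """
    return [REGISTRY[step["plugin"]].handler(**step["args"]) for step in plan]

# Illustrative plan with made-up values, mirroring the reserve/plan/count/buy flow.
plan = [
    {"plugin": "opentable", "args": {"cuisine": "Thai", "city": "Chicago"}},
    {"plugin": "wolfram", "args": {"recipe": "pasta primavera"}},
    {"plugin": "instacart", "args": {"recipe": "pasta primavera"}},
]
for result in run_plan(plan):
    print(result)
```

The design point is that adding a capability means registering one more description and handler; the model, not the user, decides when to call it.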

Now just imagine what ChatGPT can help you do once email, calendar, home automation, maps, etc. are all connected to AI. It’s the promise we were given by Google, Apple and Amazon’s voice assistants, which all fell far short of expectations. With ChatGPT plugins, OpenAI now has a more developer-friendly platform that is hardware-agnostic and will understand and carry out our requests far more effectively.

OpenAI has also evolved its business from being a supplier (of AI models) to becoming an Aggregator. Ben Thompson, my favorite technology analyst, defines Aggregators as companies that do the following:

- Attract users: They create user-friendly platforms that attract a large user base.

- Leverage user data: Aggregators use the data generated by their users to improve and personalize their services, making their platforms even more attractive and engaging.

- Dominate the value chain: By accumulating users and data, Aggregators become more powerful and gain control over suppliers. This allows them to dictate terms and make it difficult for competitors to keep up.

OpenAI satisfies all three of these criteria. First, it built ChatGPT, the fastest-growing consumer application ever and now also a platform, to attract users. Second, it uses the data from ChatGPT interactions to improve its underlying models. Finally, it is now positioned to dominate the value chain by learning from and improving its platform based on how users interact with plugins, giving it the opportunity to become the default interface for software.

OpenAI does, however, have one big disadvantage: distribution. Although ChatGPT reportedly has over 100M users, OpenAI’s distribution still pales in comparison to the billions of people who have iPhones and Android phones, and the millions of applications in their ecosystems. Those applications expose actions that the mobile operating systems can already access, so it seems only a matter of time before Apple and Google upgrade their assistants with capabilities similar to ChatGPT’s. This isn’t a trivial problem to solve, because assistants like Siri rely on local processing carried out on the device to quickly interpret and respond to users, and a GPT-4-sized model is too big to fit on a mobile phone. But that may not be true for long. Last week, I shared how Stanford researchers were able to get a much smaller 7-billion-parameter language model to perform comparably to GPT-3.5[3].
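A back-of-the-envelope calculation shows why model size matters for on-device assistants. The memory needed just to hold a model’s weights is roughly the number of parameters times the bytes used per parameter. GPT-4’s parameter count has not been published, so this minimal Python sketch uses GPT-3’s 175 billion parameters as a stand-in for a large cloud model, alongside a 7-billion-parameter model like the one the Stanford researchers used. The precisions are assumptions: 16-bit weights take 2 bytes each, while 8-bit and 4-bit quantization are included as illustrative ways of shrinking a model. Activations and other runtime overhead are ignored.

```python
# Approximate memory needed just to store a model's weights:
# parameters x bytes per parameter. Ignores activations and runtime overhead.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}  # assumed precisions

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# GPT-3's 175B parameters stand in for a large cloud model (GPT-4's size is unpublished).
for name, params in [("GPT-3 (175B)", 175e9), ("7B model", 7e9)]:
    for precision in BYTES_PER_PARAM:
        print(f"{name:13s} {precision}: {weight_memory_gb(params, precision):7.1f} GB")
```

Even at 4 bits, a 175-billion-parameter model needs 87.5 GB just for its weights, far beyond a phone’s memory, whereas a 7-billion-parameter model at 4 bits needs about 3.5 GB. That gap is why smaller models look like the more plausible route onto devices.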

I expect that both Google and Apple are working on bringing their own large-scale language models to their mobile operating systems. But surely Google has the AI smarts to beat Apple? If Google’s recent public release of Bard is anything to go by, that may simply not be the case:

Just tried Bard. It's great https://t.co/FVLfSsROqd

— Acorn1010 (@theacorn1010) 4:54 AM ∙ Mar 24, 2023

In a post I wrote in February titled “Is Google still the leader in AI?”[4], I concluded that Google’s latest models are no better than OpenAI’s, and sometimes worse. Furthermore, as I stated a few weeks ago, I think that foundation models will soon be commoditized, with little difference between them.

At that point, Apple may actually have an unlikely advantage over Google: its vertical integration, from Siri Shortcuts all the way down to its proprietary Neural Engine silicon, will make it easier for Apple to embed a high-performance language model on its devices. Now, Apple hasn’t given any indication that it is working on its own models, but the company is notoriously hush-hush about its R&D and often prefers “best to market” over “first to market”. I for one will be keeping a keen eye on WWDC this summer and would be surprised if Apple doesn’t announce some major Siri upgrades.

OpenAI has made the first move in AI as a platform. I’m excited to see what Google, Apple and Amazon do next.

2. GPT-4 shows sparks of general intelligence

With all the buzz around OpenAI’s plugins launch, a research paper published by Microsoft didn’t get as much attention as it probably should have:

At @MSFTResearch we had early access to the marvelous #GPT4 from @OpenAI for our work on @bing. We took this opportunity to document our experience. We're so excited to share our findings. In short: time to face it, the sparks of #AGI have been ignited.

— Sebastien Bubeck (@SebastienBubeck) 12:48 AM ∙ Mar 23, 2023

arxiv.org/abs/2303.12712

The paper, titled “Sparks of Artificial General Intelligence: Early experiments with GPT-4”[5], was published by Microsoft Research, whose team had been working with GPT-4 since October of last year. What they found was truly remarkable, and I recommend watching this video if you want a quick 15-minute rundown of the paper:

A few choice quotes from the paper:

“We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.”

“We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting.”

“Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT.”

“Given the breadth and depth of GPT-4’s capabilities,” they continue, “we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

Throughout the paper, however, the authors are careful to state that they see GPT-4 as “only a first step towards a series of increasingly generally intelligent systems,” not as true human-level intelligence or AGI. Yet despite this, some of the examples they provide are pretty mind-blowing.

Here are a few of the highlights:

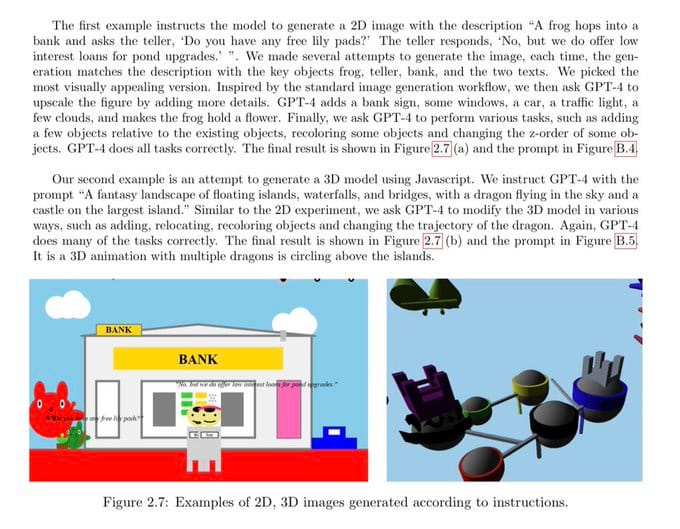

1/ GPT-4 can carry out visual tasks and form its own mental model of the world described to it:

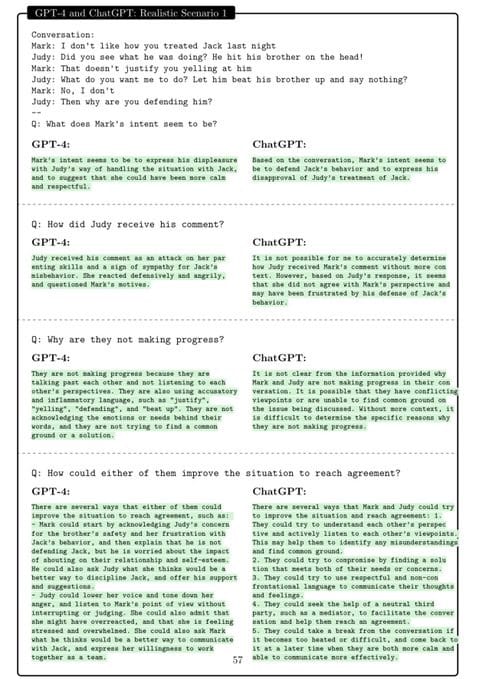

2/ GPT-4 has Theory of Mind[6] capabilities that enable it to understand the perspective of others:

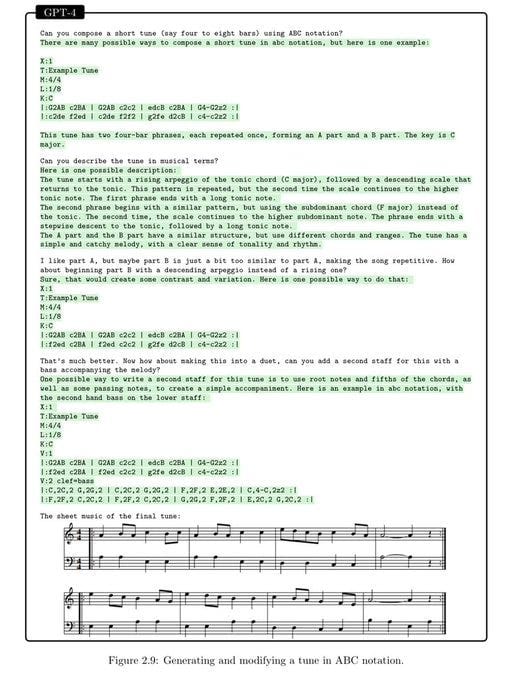

3/ GPT-4 can compose music and understands notes, timing and rhythm:

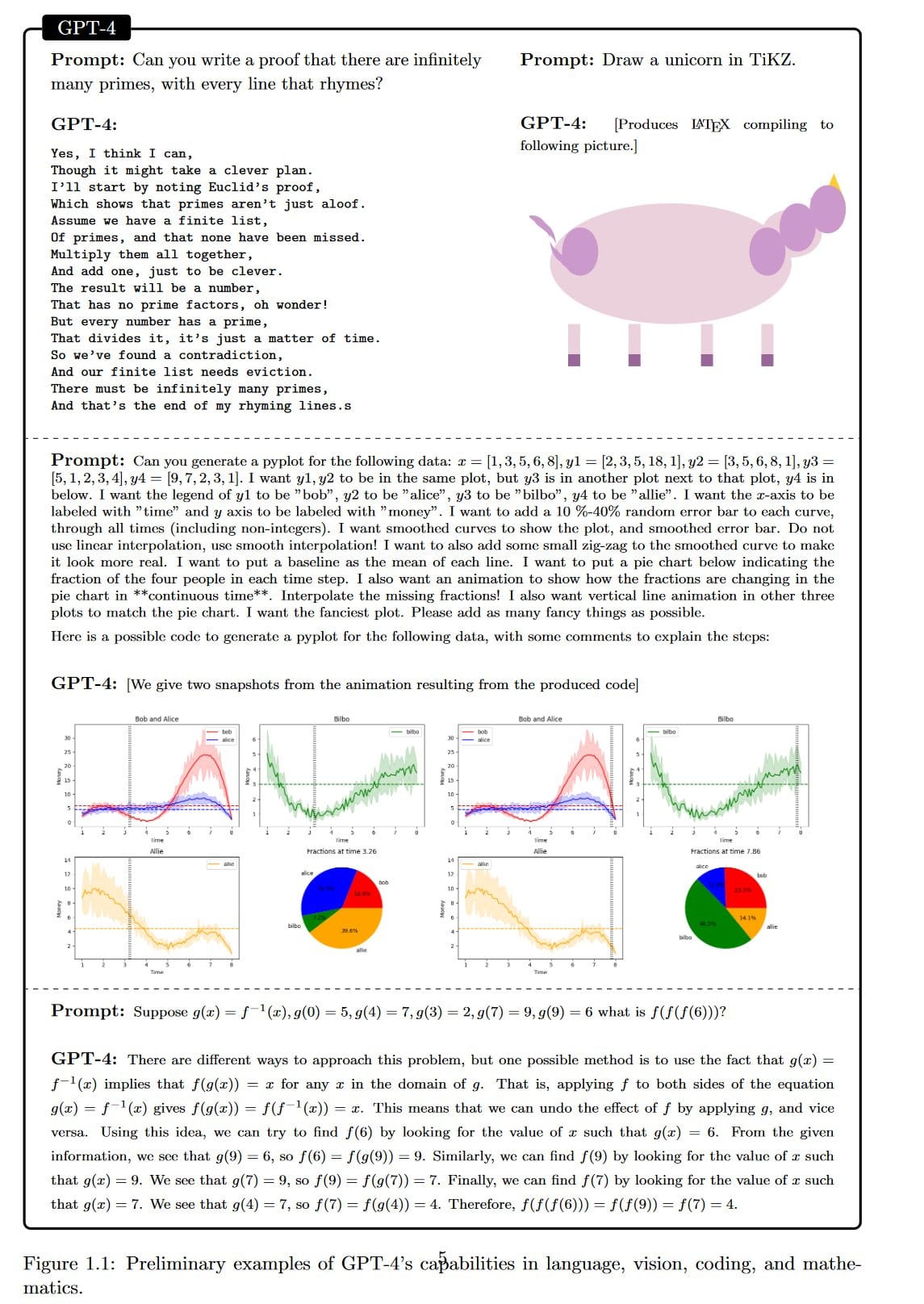

4/ GPT-4 can write mathematical proofs that rhyme, plus it knows how to draw unicorns!

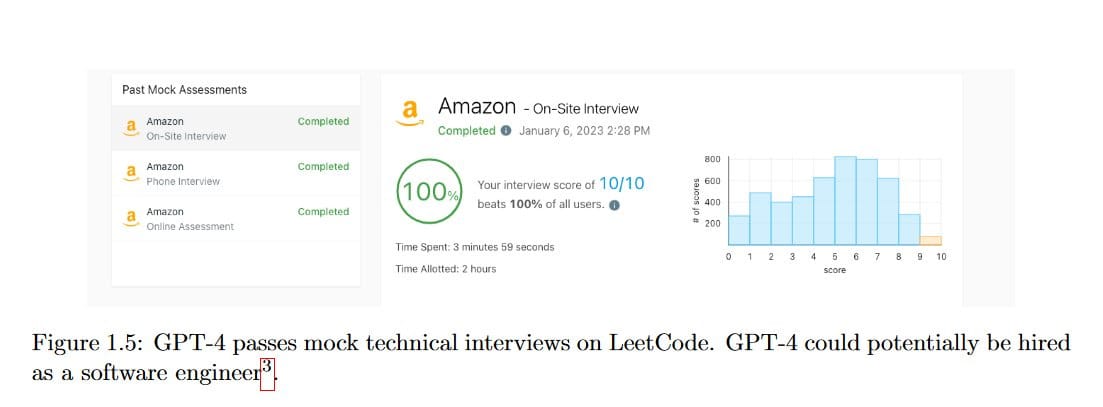

5/ GPT-4 passes Amazon on-site engineering interview questions with a 100% score:

So how close exactly is GPT-4 to AGI?

It may be telling that Microsoft’s working title for the paper was “First Contact With an AGI System”[7], but they chose to go with a less alarming title instead. Not coincidentally, forecasts for when “weak AGI” will be publicly known have also halved in the last month, from 5 years away to 2.5 years away:

At the beginning of March, forecasters estimated "Weak AGI" to be 5 years away.

— Nathan Labenz (@labenz) 2:15 PM ∙ Mar 24, 2023

Now... just 2.5 years 🤯😨

Makes sense given that GPT-4 clearly satisfies 3/4 criteria

Based on the exponential progress we’re making, my estimate is that we will have technology equivalent to AGI within the next 2 years. Given that possibility, we as a society are probably very underprepared for the implications. In my last podcast episode with Charlie Newark-French, we discussed AGI’s impact on society, and Charlie’s take was a cautionary one:

“If we just stumble through it. My thinking, AJ, is that this is what the world looks like and it's not pretty. It looks like three classes of people. You have your asset owners, you can think of them as today's billionaire. They will probably end up controlling the world. There might be some fake democracy out there, when you have all of the sort of AI infrastructure owned by ultimately a small group of people you're probably gonna have them influencing most decisions.”

You can listen to the full episode with Charlie here:

As I pointed out in the episode, the first step is to educate more of society about the breakneck progress we are making in AI, which is one of my motivations for writing this newsletter. My request to all of you Hitchhikers is to educate your friends and family too. AGI is coming, and probably a lot faster than we as a society are ready for.

3. AI’s killer use-case today

Since the launch of GPT-4 I’ve become more and more convinced that there is one use-case for AI today that is going to create more economic value in our generation than any previous technology. That is the ability for anyone with an idea to build, launch and grow a software business with zero experience.

Don’t believe me?

1/ First, you need to come up with an idea. No problem. ChatGPT can help you come up with not only an idea but also a step-by-step plan for executing it:

I gave GPT-4 a budget of $100 and told it to make as much money as possible.

— Jackson Greathouse Fall (@jacksonfall) 8:48 PM ∙ Mar 15, 2023

I'm acting as its human liaison, buying anything it says to.

Do you think it'll be able to make smart investments and build an online business?

Follow along 👀

2/ Now that you have an idea, the next step is to create a quick mock-up so you can get a feel for the product and get feedback from customers. Galileo AI has you covered!

Being a solo entrepreneur just got so much easier!

— DataChazGPT 🤯 (not a bot) (@DataChaz) 11:16 AM ∙ Feb 12, 2023

@Galileo_AI is #ChatGPT for #UI design! 🤯

✔️ Stunning UI designs with just a text prompt

✔️ Blazing fast

✔️ Fully editable in @figma! 🔥

Early access: useGalileo.ai

3/ OK, now that you have a mock-up, it’s time to do some customer interviews and capture feedback. Fathom Video’s AI-powered note-taking can capture the most important customer insights automatically.

Playing with Fathom, which is an app for Zoom which transcribes, pulls out questions, and uses AI to summarise Zoom meetings.

— Dorcas ☆ (@zonua) 6:16 PM ∙ Feb 27, 2023

Will experiment with it tomorrow in class at @UCD_Innovators

@FathomDotVideo

4/ Next up, you need to build a prototype of your idea, but you have zero coding experience. No need to hire an engineer just yet. Use GitHub Copilot X to help you build your app and learn how to code along the way!

Microsoft is releasing Github Copilot X 🎉

— Mark Tenenholtz (@marktenenholtz) 2:34 PM ∙ Mar 22, 2023

It includes:

• AI-generated answers from code docs

• Chat interface for code suggestions

• Copilot for the command line

• Voice interface with Copilot

• Copilot for pull requests

Okay, NOW it's so over.

5/ Now that you have a prototype, it’s time to secure some funding. Use Tome’s AI presentation software to create a beautiful pitch deck complete with market research:

We’ve shown you how @magicaltome can help you generate a starting point for your narrative and iteratively edit your work.

— Henri Liriani (@hliriani) 3:58 PM ∙ Mar 13, 2023

Now we’re building in even more power and flexibility, unlocked by an interface that feels natural to use.

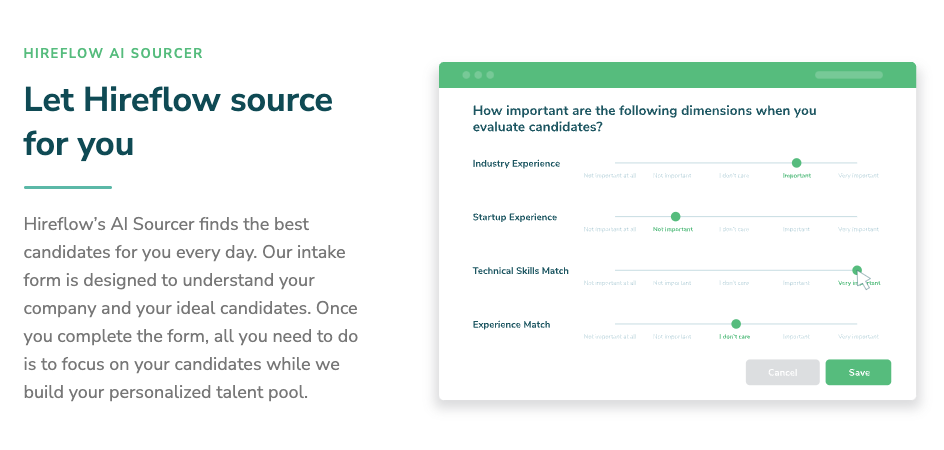

6/ With funding in the bank, it’s time to grow your team. Hireflow will source engineers for you while you finally catch up on some sleep.

7/ You have a team. Time to start executing! Coda’s new AI features will help you quickly create your roadmap, plan your go-to-market campaign, manage your sales funnel and basically run your whole business.

This demo of @coda_hq's AI assistant is totally blowing my mind. Just casually dropping a way for anyone to compose apps by just typing what they want. The future of app platforms is going to be wild.🤯 youtu.be/hl1jcGq4Mes?t=…

— Owen Williams ⚡ (@ow) 7:15 PM ∙ Feb 17, 2023

8/ Time to launch your website so everyone can find out about your new product. Soon you will be able to do this at the click of a button with Framer:

We're not so far away from a world where we can generate websites with AI.

— Framer University (@learnframer) 11:00 AM ∙ Mar 22, 2023

@framer 👀

9/ When you’re ready to start acquiring more customers, you can create all your ad copy and marketing content with Jasper’s AI copywriting tools:

Jasper For Business is here.

— Jasper (@heyjasperai) 6:51 PM ∙ Feb 14, 2023

How's it work? Here's a big look behind the scenes.

10/ And don’t forget to make sure there’s help at hand when your customers need it. Intercom’s AI customer support chatbot, Fin, is ready to assist.

Please meet Fin. A world- first, and perhaps our most important release to-date. Powered by @ OpenAI’s new GPT-4 and Intercom’s proprietary ML tech, it’s the “ChatGPT for customer service” bot we all knew was coming.

— Intercom (@intercom) 6:10 PM ∙ Mar 14, 2023

AI is going to make it so much easier for anyone to start a startup, and that’s pretty awesome. So if you have an idea for a startup, you really have no excuse now. It’s time to start building!

That’s all for this week! If you enjoyed this newsletter and aren’t a subscriber yet, please don’t forget to subscribe!


[1] Actionable AI is like having a super-smart assistant that can actually do stuff for you. It's when artificial intelligence (AI) is used to analyze data or perform tasks in a way that helps you make decisions, solve problems, or get things done more efficiently. So, instead of just spitting out information, actionable AI gives you recommendations or takes actions that make your life easier. ↩

[2] A large-scale language model (LLM) is a type of deep learning model that is trained on a large dataset of text (e.g. large portions of the internet). LLMs take text as input and predict the text most likely to come next, one token (a word or piece of a word) at a time. They are used for a wide variety of tasks, such as language translation, text summarization and generating conversational text. OpenAI’s GPT-3 (Generative Pre-trained Transformer 3), the predecessor of the GPT-3.5 models that power ChatGPT, is an example of a generative LLM. It uses the Transformer architecture, which made it practical to train on a massive text dataset of hundreds of gigabytes, and it has 175 billion parameters (learned weights).

Learn more about LLMs in part 3 of my deep dive into deep learning. ↩
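The “predict the next piece of text” idea can be illustrated with a deliberately tiny Python sketch. This toy bigram model, chosen here only for illustration, counts which word follows which in its training text and then generates by repeatedly appending the most frequent follower. It is not how GPT works internally: a Transformer learns billions of weights and conditions on a long window of preceding text rather than only the last word. But the generation loop has the same basic shape: predict a token, append it, repeat.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows: dict[str, Counter], prompt: str, n_words: int) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# A made-up miniature "training set" for the toy model.
corpus = "the cat sat on the mat . the cat sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the", 4))  # prints "the cat sat on the"
```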

[3] GPT-4's killer use-case, Microsoft and Google's AI battle comes to your workspace, plus LLaMAs for everyone

[4] Is Google still the leader in AI?

[5] Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E., ... & Zhang, Y. (2023). Sparks of Artificial General Intelligence: Early experiments with GPT-4. arXiv preprint arXiv:2303.12712. ↩

[6] Theory of Mind is all about understanding that other people have their own thoughts, feelings, and beliefs that are different from yours. It's like being able to put yourself in someone else's shoes and see things from their perspective.

This skill starts developing when we're just little kids and keeps getting better as we grow up. It helps us navigate social situations, make friends, and cooperate with others. We use our Theory of Mind to predict how someone might react, what they might be thinking, or how they're feeling, even if they don't straight up tell us. So basically, it's our mental superpower to understand and connect with other people. ↩

"First Contact With an AGI System" appears as a commented-out title within the latex source code for arxiv.org/abs/2303.12712

— near (@nearcyan) 10:22 PM ∙ Mar 23, 2023

aka the Microsoft "Sparks of Artificial General Intelligence: Early experiments with GPT-4" paper ↩